AI Car Damage Detection: How it works

Explore how AI is changing vehicle inspections and car damage detection across insurance, car rental/remarketing, and fleet management industries by enhancing damage detection accuracy and efficiency.

Vehicle inspections (for damage detection or general health checks) have traditionally been a manual process, with humans required to inspect and analyse vehicle conditions.

With technology evolving, we can now inspect vehicles and detect damage using AI. This makes the process faster and more cost-effective while also reducing the chances of error and fraud.

(Also read: The evolution of vehicle inspections - exploring the past, present, and future)

How capable is AI-based vehicle damage detection, and how does it work? How do you ensure high accuracy and tackle the challenges involved?

Let's dive into the details in this blog.

Types of Car Damage

Car damage caused by an accident or impact is mostly cosmetic or structural. While most of this damage is easy to detect, some categories aren’t easily noticeable.

The type and extent of the damage depend on how the car’s body came into contact with whatever caused it.

There are three primary categories of vehicle damage, based on the component impacted: Metal Damage, Glass Damage, and Miscellaneous Damage.

Metal Damage

This category of vehicle damage involves the metal parts of its body (bumper, hood, doors, boot, or other metal parts) and can be categorized as dents, scratches, or tears.

What are dents?

A dent occurs when an impact presses a metal part inward, leaving a concave depression on the surface. This type of metal damage is quite common in crashes.

What are scratches?

A scratch is the most common type of vehicle damage. It occurs when a metal part rubs against another hard surface, removing a layer of paint in the process.

What are tears?

When an impact causes a metal part to split apart, it’s called a tear. Tears occur either at the edge of a car part or within it.

Glass Damage

Glass damage affects the glass parts of the car’s body, such as the windshield, back glass, windows, headlights, and tail lights. It can be further classified into cracks, chips, spider cracks, and large-range glass damage.

What is a crack?

A crack, also known as a glass fracture, is a structural break on a vehicle’s windshield or window. This type of car damage can result in major safety hazards because even a small crack can compromise the glass’s integrity, reducing its ability to absorb and distribute impact, which in turn increases the risk of injury during accidents.

What is a chip?

A chip, also known as a stone chip or pit, is superficial damage to a car's glass surface caused by small stones or debris hitting the outer layer of the glass and leaving a small indentation. Its size varies with the force of impact and the object's shape.

What is a spider crack?

A spider crack is a specific type of car glass damage that starts from a central point of impact and extends outwards in multiple cracks, resembling a spider web. It weakens the structural integrity of the glass, obstructs visibility, and can spread rapidly if not repaired in time.

What is large-range glass damage?

Large-range glass damage occurs when a major area of the car windshield or window is fractured or shattered due to impact. The only solution is to replace the damaged glass to ensure both visibility and structural safety.

Miscellaneous Damage

Miscellaneous damage is the third category and covers anything that is neither metal nor glass damage, for example, a gap between car parts or a dislocated part.

Models used in AI Car Damage Detection

Now that we have a better understanding of the types of car damage, let's dive into how AI-based damage detection reads a vehicle's photos and videos using different models.

There are a variety of machine learning models we can use for vehicle damage detection, depending on the task. The most common ones are:

- Object detection model

- Segmentation model

Object Detection Model

Object detection is a core computer vision task: given an image, the model locates each object of interest (here, each instance of car damage) with a bounding box and assigns it a class label. Modern object detectors are built on deep learning.

Some of the most common object detection models include YOLO (You Only Look Once), EfficientDet, and Faster R-CNN, which provide high accuracy and can detect multiple types of car damage in a single image.

Many networks released in publications can be used to tackle different problem statements in vehicle damage detection. These networks can be modified by swapping the backbone, the part of the network that extracts features from the image. Each backbone has a different network complexity and requires a different amount of processing time to extract features.

To get the best results, we first need to identify the right problem statements and then choose the network best suited to each of them.
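To make a detector's output concrete: for each image it returns a list of boxes, each with a damage label and a confidence score, and downstream code typically keeps only the boxes above some confidence threshold. The sketch below is a minimal, library-agnostic illustration. The `Detection` class, the `keep_confident` helper, the 0.5 threshold, and the sample boxes are all hypothetical and do not reflect the exact output format of YOLO, EfficientDet, or Faster R-CNN implementations.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Detection:
    """One predicted damage instance (hypothetical structure)."""
    box: tuple[int, int, int, int]  # (x1, y1, x2, y2) in pixels
    label: str                      # damage class, e.g. "dent" or "scratch"
    score: float                    # model confidence in [0, 1]


def keep_confident(detections: list[Detection], min_score: float = 0.5) -> list[Detection]:
    """Keep only detections whose confidence reaches min_score."""
    return [d for d in detections if d.score >= min_score]


raw = [
    Detection((120, 40, 210, 95), "dent", 0.91),
    Detection((300, 60, 340, 80), "scratch", 0.72),
    Detection((15, 200, 60, 230), "scratch", 0.18),  # low confidence, likely noise
]
for d in keep_confident(raw):
    print(d.label, d.box, d.score)
```

The threshold trades false positives against false negatives: raising it discards more spurious boxes but also more real, faint damage.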

Segmentation Model

In computer vision, segmentation models partition an image into meaningful parts or regions by assigning a label to each pixel.

This model typically relies on supervised learning. In vehicle damage detection, the model learns to associate certain pixel patterns with specific types of damage by being trained on images with labelled regions.

We often use advanced models like Vision Transformers for this task. The ideal type of vision transformer model depends on specific needs, such as the level of accuracy or the complexity of the damage.
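The per-pixel labelling described above can be sketched with NumPy: a segmentation network (Vision Transformer or otherwise) outputs a score for every class at every pixel, and taking the highest-scoring class per pixel yields the mask. The class list and the tiny 2x3 score array below are hypothetical; a real model would compute them from an image.

```python
import numpy as np

CLASSES = ["background", "scratch", "dent"]  # hypothetical label set

# Hypothetical per-pixel class scores for a 2x3 image, shape (num_classes, H, W).
scores = np.array([
    [[0.90, 0.20, 0.10], [0.80, 0.70, 0.30]],  # background
    [[0.05, 0.70, 0.80], [0.10, 0.20, 0.10]],  # scratch
    [[0.05, 0.10, 0.10], [0.10, 0.10, 0.60]],  # dent
])

# Pick the highest-scoring class at each pixel: one label per pixel, shape (H, W).
mask = scores.argmax(axis=0)
print(mask)
for idx, name in enumerate(CLASSES):
    print(name, int((mask == idx).sum()), "pixels")
```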

Common challenges in AI Car Damage Detection

While there are different models that can be used for AI-powered damage detection, we also need to keep in mind that these AI models are prone to errors, which can result in false positives or false negatives in vehicle damage reports.

- False positives are the error cases where the algorithm falsely identifies vehicle damage when none exists.

- False negatives are cases where the AI fails to detect vehicle damage even when the damage exists.

Some of the most common challenges that AI models face during damage detection are -

Reflective surface of the vehicle

Shiny or metallic surfaces are very prone to reflections, which can cause the AI model to misinterpret them as defects on the vehicle’s surface, resulting in false positives. For example, light bouncing off a car's curved surface might look like a dent or a scratch and get reported as one.

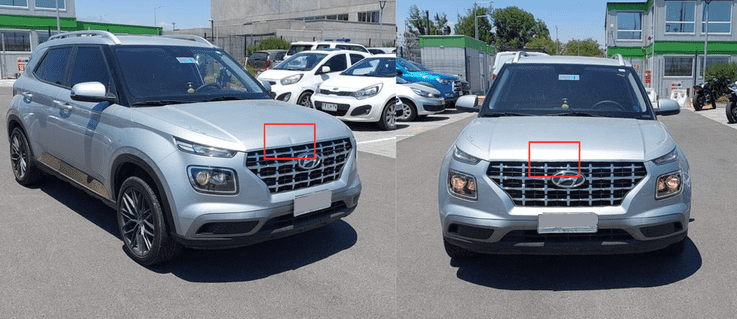

Perspective variations

While some vehicle damage might be easy to detect from one angle, the same damage might be invisible on camera from another. Similarly, a lighting effect might be mistaken for a dent or a crack when viewed from a certain angle.

Lighting issues (shadows and low light)

Poor lighting or shadows on the vehicle can either hide damage or make an undamaged surface look damaged. In low light, capturing dents or scratches is even more challenging, leading to false negatives, while shadows or uneven lighting can be mistaken for damage, resulting in false positives.

Damage overlap

In some cases, multiple damages might overlap, like a scratch across a dent. The scratch can distract the algorithm (and sometimes even the human eye) from the dent underneath, making the underlying damage harder to detect.

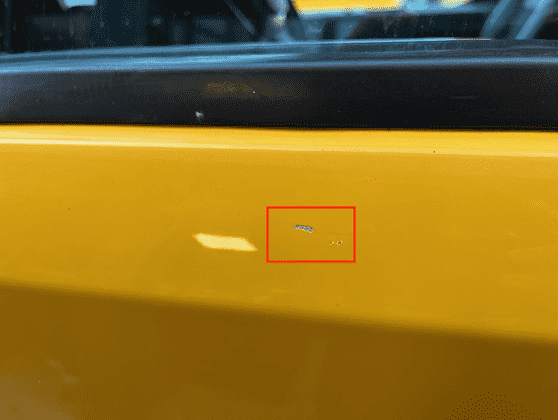

Micro damages

Micro damages are tiny scuffs and indentations on the car's surface that aren't as easy to detect because of their size. In many cases, they might go undetected even during human inspections.

How to overcome these challenges

AI-powered Car Damage Detection isn’t easy and comes with a ton of challenges.

However, these challenges can be worked around with a few basic steps, including -

- Multi-angle imaging captures vehicle damage from different viewpoints, giving the AI model varied perspectives of the damage. This results in fewer false positives or negatives caused by reflections and perspective variations.

- To avoid shadow and low-light issues, shadow detection and processing techniques are applied to remove shadows from the images. If an image is too dark or heavily shadowed, the model is trained to flag it and exclude it from the damage detection process to avoid false positives or negatives.

- Damage overlap is a rare scenario. To handle it, visuals of overlapping damage are collected specifically and used as training data so the AI model learns to identify these cases when they occur.

- Micro damages can be captured by photographing the car in its entirety at high quality for better analysis. Using a good camera, picking the right location, lighting, and background, and framing the shots correctly all help.
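The flagging step above is done by a trained model. As a much simpler stand-in, the sketch below flags an 8-bit grayscale image as too dark using two brightness statistics: its mean intensity and the fraction of very dark pixels. The function name `flag_low_light` and all thresholds are hypothetical assumptions chosen for illustration, not values from a production system.

```python
import numpy as np


def flag_low_light(gray: np.ndarray, min_mean: float = 60.0,
                   max_dark_fraction: float = 0.5, dark_level: int = 40) -> bool:
    """Return True if an 8-bit grayscale image looks too dark to analyse.

    Flags the image when its mean brightness is below min_mean, or when more
    than max_dark_fraction of its pixels are darker than dark_level.
    All thresholds are illustrative assumptions, not tuned values.
    """
    mean_brightness = float(gray.mean())
    dark_fraction = float((gray < dark_level).mean())
    return mean_brightness < min_mean or dark_fraction > max_dark_fraction


well_lit = np.full((480, 640), 150, dtype=np.uint8)
night_shot = np.full((480, 640), 25, dtype=np.uint8)
half_shadow = well_lit.copy()
half_shadow[:, :400] = 20  # left 62.5% of the frame in deep shadow

for name, img in [("well_lit", well_lit), ("night_shot", night_shot),
                  ("half_shadow", half_shadow)]:
    print(name, "excluded" if flag_low_light(img) else "kept")
```

The second check matters: `half_shadow` has a mean brightness above the threshold, yet most of its pixels are in shadow, so a mean-only rule would let it through.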

(Also read: Guidelines for capturing micro damages through photos/videos of a damaged vehicle)

Which model works better for AI Car Damage Detection?

Both object detection and segmentation models have their own pros and cons. How well each performs depends on the scenario in which it is used (as discussed earlier), and both will report false positives or negatives in certain scenarios.

The ideal solution to avoid this (also the best model for AI-powered damage detection) would be to use both models together.

Combining the two gives us an ensemble that draws on the strengths of each model while covering the other's drawbacks, resulting in fewer errors in the final prediction during AI-powered Damage Detection.

To better understand how this works, let's examine the models in action for detecting scratches and glass cracks.

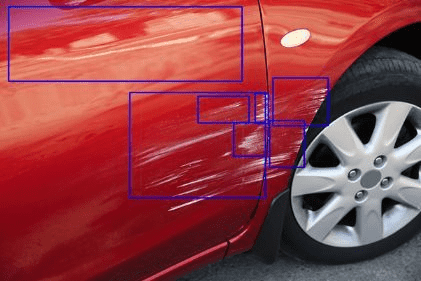

Scratch detection

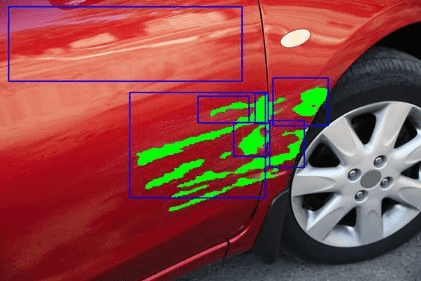

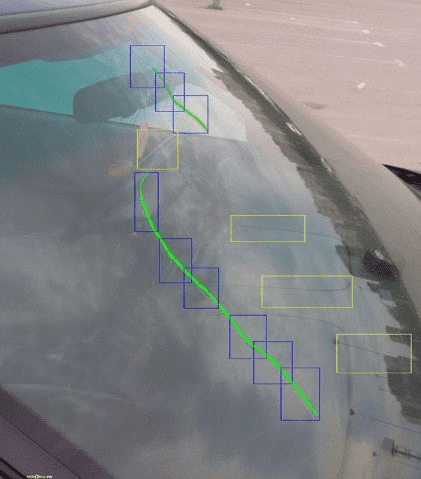

Check out the image below, in which the results of scratch detection were generated using only the object detection method.

The bottom detections are accurate, but the top detection is a false positive: the model mistakes the reflection for a scratch.

In some cases, the false positives can outnumber the actual scratches, and the model may suggest that the car is severely damaged when it isn't.

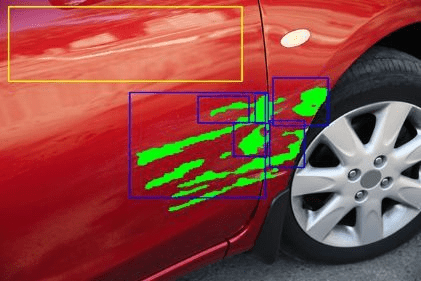

Now let's look at the same image with the segmentation model's output overlaid on it.

Here, the model successfully localized the actual scratch damage with high precision despite there being a reflection on the car (the green patches highlight the prediction generated using the segmentation model).

Now, let's combine both models and build an ensemble between them.

As you can see, the boxes with overlapping segmentation pixels (here in green) are accurate predictions, while empty boxes are inaccurate.

From this, we can split the boxes into two categories: those that overlap with the segmentation pixels (in blue) and empty boxes (in yellow). We can then train the AI model to accept the blue boxes (with overlap) and reject the yellow ones (without overlap), making its damage predictions more accurate and reliable.
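The accept/reject rule above can be sketched as a filter over the detector's boxes using the segmentation mask: a box is kept ("blue") if it contains segmentation-positive pixels and dropped ("yellow") otherwise. This is a minimal sketch, not Inspektlabs' implementation. The function name `split_boxes_by_mask`, the `min_pixels` parameter (default: any overlap at all), and the synthetic mask and boxes are assumptions for illustration.

```python
import numpy as np


def split_boxes_by_mask(boxes, mask, min_pixels=1):
    """Split detector boxes by whether they contain segmentation-positive pixels.

    boxes: list of (x1, y1, x2, y2) integer pixel boxes (x2, y2 exclusive).
    mask: 2D boolean array, True where the segmentation model predicts damage.
    min_pixels: hypothetical minimum overlap, in pixels, needed to keep a box.
    """
    accepted, rejected = [], []  # "blue" and "yellow" boxes respectively
    for box in boxes:
        x1, y1, x2, y2 = box
        overlap = int(mask[y1:y2, x1:x2].sum())  # damage pixels inside the box
        if overlap >= min_pixels:
            accepted.append(box)
        else:
            rejected.append(box)
    return accepted, rejected


# Hypothetical 100x100 image: the segmentation model marks one thin scratch.
mask = np.zeros((100, 100), dtype=bool)
mask[60:63, 10:50] = True

boxes = [(5, 55, 55, 70),   # covers the scratch
         (20, 5, 60, 20)]   # a reflection the detector mistook for a scratch
accepted, rejected = split_boxes_by_mask(boxes, mask)
print("accepted:", accepted)
print("rejected:", rejected)
```

Requiring a larger overlap (a higher `min_pixels`, or a minimum fraction of the box area) makes the filter stricter, at the risk of dropping real damage that the segmentation model only partly captured.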

Glass crack detection

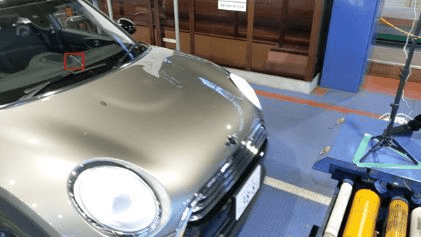

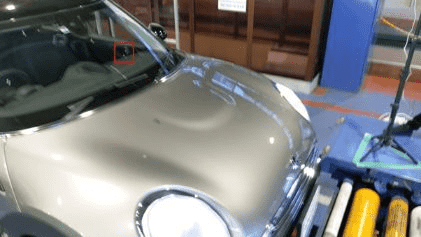

Since glass is inherently highly reflective, object detection alone struggles even more with glass damage. This is where the ensemble technique we used earlier proves its worth.

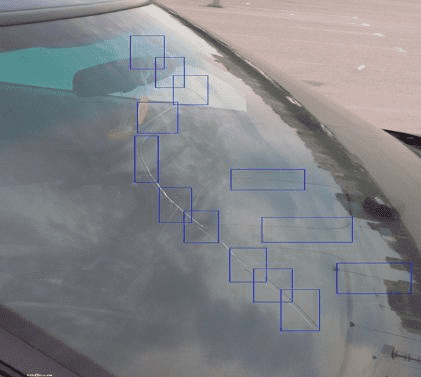

Let's see how this model works to detect glass cracks using the image below.

Here are the results we get by running the object detection model on it.

As we can clearly see, in addition to the actual cracks, the object detection model has classified the reflections of the poles as glass cracks, i.e., it generated several false positives.

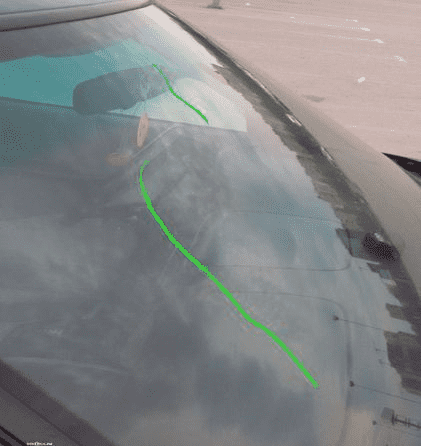

Now, when we run the same through a segmentation model for analysis, we can see that it accurately predicts the crack damage visible in the image.

This prediction will be used in combination with the object detection model results to generate the final ensemble prediction.

After the segregation of the two categories of boxes (blue for overlaps and yellow for no overlaps), we will finalize only the overlapping ones.

The final ensemble prediction, as you can see, is highly accurate, with no false positives. This shows how ensembling the two models improves accuracy and the resulting metrics.

Importance of labelling and data quality for AI Damage Detection models

Labelling is a key step in improving the accuracy of your AI Damage Detection model, because labelled data is what is used to "train" the model to recognize patterns and make predictions.

Labelling, put simply, is the process of assigning specific tags or annotations to data (in this case, images and videos of different kinds of vehicle damage). Using precise labels ensures that the AI model learns correctly from the data being fed.

For example, when labelling a scratch, it is important to use multiple smaller bounding boxes that closely fit the damage's shape rather than one or two larger boxes that cover more area than necessary.
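A small worked example shows why tight boxes matter. The sketch measures a "fill ratio", the fraction of the labelled box area that is actually damage, for a thin diagonal scratch labelled either with one loose box or with ten tight boxes along its length. Both labellings cover every scratch pixel, but the loose box is 99% background, which teaches the model that background is part of the scratch. The synthetic mask, the box coordinates, and the `fill_ratio` helper are hypothetical.

```python
import numpy as np

# Hypothetical 100x100 damage mask: a thin diagonal scratch, one pixel per row.
mask = np.zeros((100, 100), dtype=bool)
idx = np.arange(100)
mask[idx, idx] = True


def fill_ratio(boxes, mask):
    """Fraction of the (union of) labelled box area that is actually damage."""
    covered = np.zeros_like(mask)
    for x1, y1, x2, y2 in boxes:
        covered[y1:y2, x1:x2] = True
    return float(mask[covered].sum() / covered.sum())


one_loose_box = [(0, 0, 100, 100)]
ten_tight_boxes = [(10 * k, 10 * k, 10 * k + 10, 10 * k + 10) for k in range(10)]

for name, boxes in [("one loose box", one_loose_box),
                    ("ten tight boxes", ten_tight_boxes)]:
    print(name, "fill ratio:", fill_ratio(boxes, mask))
```

Here the loose box gives a fill ratio of 100 / 10,000 = 0.01, while the ten tight boxes give 100 / 1,000 = 0.1, a tenfold improvement with the same scratch coverage.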

Additionally, data selection and analysis also play a very important role in improving the accuracy of the vehicle damage detection model. Analysing the data helps us identify where the model is struggling so we can focus on improving training with more of those specific cases.
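One simple way to see where a model struggles is to break recall down by damage type. The sketch below assumes hypothetical evaluation records of the form (damage type, whether the model found it) for each ground-truth damage, and sorts the damage types from weakest to strongest; the record format and values are illustrative only.

```python
from collections import Counter

# Hypothetical records: (damage_type, was_detected) for each ground-truth damage.
records = [
    ("scratch", True), ("scratch", True), ("scratch", False),
    ("dent", True), ("dent", True),
    ("glass_crack", False), ("glass_crack", False), ("glass_crack", True),
]

totals = Counter(kind for kind, _ in records)
found = Counter(kind for kind, detected in records if detected)

# Recall per damage type; the lowest values suggest where more training data may help.
per_class_recall = {kind: found[kind] / totals[kind] for kind in totals}
for kind, recall in sorted(per_class_recall.items(), key=lambda kv: kv[1]):
    print(f"{kind}: recall {recall:.2f}")
```

In this made-up data, glass cracks have the lowest recall, so they would be the first candidates for collecting and labelling more examples.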

Both precise labelling and thoughtful data selection are important for training high-performing damage detection models.

AI Car Damage Detection via tracking method

In many cases, even after training, the data we've fed the model is still too scarce. This could be due to a lack of images or to inconsistent labelling of the images used.

In such cases, we often end up with a model that is not robust enough and is prone to reporting false positives or false negatives during vehicle damage detection.

However, we can still detect damage reliably by switching from images to videos of the car. This method analyses the video and tracks objects (here, vehicle damage) across frames, using information from neighbouring frames to accurately predict the damage and its location.

Let's test this method on a cracked windshield.

Let's break this down frame by frame.

In the video above, the car moves from left to right, and a red box on the windshield marks the crack detection model's prediction. The model detects the crack correctly in 5 of the 7 frames; the detections in frames 3 and 7 are false positives.

Tracking the red box and its position with a tracking algorithm lets us predict where the box should appear in future frames. How often a tracked box appears, and where, helps differentiate true positives from false positives.
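The idea can be sketched with a very simple tracker: link each detected box to the track whose last box overlaps it most (by IoU), then keep only tracks that persist across several frames. Real damage moves smoothly with the car and keeps getting re-detected, while reflections tend to appear once in unrelated places. This greedy IoU tracker is an assumed stand-in for whichever tracking algorithm is used in practice, and the frame data below is hypothetical, built to mimic the 7-frame example with false positives in frames 3 and 7. The names `track_and_filter`, `iou_thr`, and `min_hits` are illustrative.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def track_and_filter(frames, iou_thr=0.3, min_hits=3):
    """Greedy IoU tracker: attach each box to the best-overlapping track from
    earlier frames, then keep only tracks seen in at least min_hits frames."""
    tracks = []  # each track: {"box": last box seen, "frames": frame indices}
    for t, boxes in enumerate(frames):
        for box in boxes:
            best, best_iou = None, iou_thr
            for track in tracks:
                if track["frames"][-1] == t:
                    continue  # a track takes at most one box per frame
                overlap = iou(track["box"], box)
                if overlap >= best_iou:
                    best, best_iou = track, overlap
            if best is None:
                tracks.append({"box": box, "frames": [t]})  # start a new track
            else:
                best["box"] = box
                best["frames"].append(t)
    return [track for track in tracks if len(track["frames"]) >= min_hits]


def crack_box(t):
    """Hypothetical crack box drifting 5 px right per frame as the car moves."""
    return (100 + 5 * t, 50, 160 + 5 * t, 90)


frames = [
    [crack_box(0)], [crack_box(1)],
    [(300, 200, 330, 220)],          # frame 3: false positive
    [crack_box(3)], [crack_box(4)], [crack_box(5)],
    [(20, 150, 50, 170)],            # frame 7: false positive
]
for track in track_and_filter(frames):
    print("confirmed damage in frames", [t + 1 for t in track["frames"]])
```

Because the crack's track survives the gap at frame 3, it collects five hits and is confirmed, while each false positive forms a one-frame track and is discarded.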

Metrics used for building an AI Car Damage Detection Model

When building any machine learning or deep learning model for vehicle damage detection, it is very important to choose a metric that measures how well the model performs on the test data. Evaluating models against these metrics and picking the best results is what helps us train and improve the accuracy of an AI Car Damage Detection model.

We focus on two main metrics based on the two models we discussed earlier (object detection and segmentation).

mAP for Object detection model

mAP, or mean Average Precision, is a performance metric used to evaluate how well a model detects objects (in this case, vehicle damage) in images, giving you an idea of how accurate and thorough the model is at its job.

Imagine you have a model that's trying to find vehicle damage like dents or scratches in a set of images given to it. The mAP tells you how well it did the job based on two parameters -

- Precision: How many of the model's detections were actually correct (the model didn't mistake something else for damage)?

- Recall: How much of the real damage did the model actually find (it didn't miss any)?

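The mAP score combines both of these parameters. For each damage class, Average Precision (AP) summarizes precision across all recall levels (the area under the precision-recall curve), and mAP is the mean of the AP values across all damage classes. The result is a number between 0 and 1 (or 0% and 100%) that reflects how well the model performed overall: the higher the score, the more accurately the model detects damage.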
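The calculation can be sketched in plain Python. The sketch ranks one class's detections by confidence, traces precision and recall as each detection is added, and integrates the interpolated precision-recall curve (all-point interpolation, as in later PASCAL VOC evaluations); mAP is then the mean over classes. It assumes each detection has already been marked as a true or false positive by IoU matching against the ground truth, and the scores and counts are hypothetical. Benchmarks such as COCO additionally average over several IoU thresholds, which this sketch does not do.

```python
def average_precision(scores, is_tp, num_gt):
    """AP for one damage class using all-point interpolation.

    scores: confidence of each detection.
    is_tp: whether each detection matched a ground-truth damage (decided
        beforehand with an IoU threshold).
    num_gt: number of ground-truth damages of this class (assumed > 0).
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:  # add detections from most to least confident
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    ap, prev_recall = 0.0, 0.0
    for k, r in enumerate(recalls):
        if r > prev_recall:
            # Interpolated precision: best precision at this recall or higher.
            ap += (r - prev_recall) * max(precisions[k:])
            prev_recall = r
    return ap


# Hypothetical detections for two damage classes.
ap_scratch = average_precision([0.9, 0.8, 0.7, 0.6], [True, False, True, False], num_gt=3)
ap_dent = average_precision([0.95, 0.5], [True, True], num_gt=2)
mean_ap = (ap_scratch + ap_dent) / 2
print(f"AP scratch={ap_scratch:.3f}, AP dent={ap_dent:.3f}, mAP={mean_ap:.3f}")
```

In this example, the scratch class misses one of its three ground-truth damages and includes two false positives, so its AP (5/9) is well below the dent class's perfect 1.0.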

IoU for Segmentation model

IoU (Intersection over Union) is the area of overlap between the ground-truth reference box and the model's predicted box, divided by the area of their union, essentially telling you how much the two boxes overlap. For segmentation, the same ratio is computed over the pixels of the ground-truth and predicted masks.

The IoU is assigned a score between 0 and 1 based on the amount of overlap, where 0 means no overlap and 1 means complete overlap.
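Both forms of IoU take only a few lines. The sketch below computes box IoU from (x1, y1, x2, y2) corner coordinates and mask IoU by counting pixels in boolean NumPy arrays; the function names and example shapes are illustrative.

```python
import numpy as np


def box_iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)  # 0 if the boxes do not overlap
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def mask_iou(pred, truth):
    """IoU of two boolean masks, counted in pixels."""
    union = np.logical_or(pred, truth).sum()
    return float(np.logical_and(pred, truth).sum() / union) if union else 0.0


pred = np.zeros((4, 4), dtype=bool)
pred[:2, :] = True   # top half predicted as damage (8 px)
truth = np.zeros((4, 4), dtype=bool)
truth[:, :2] = True  # left half is the real damage (8 px)

print(box_iou((0, 0, 10, 10), (5, 0, 15, 10)))  # overlap 50 px, union 150 px
print(mask_iou(pred, truth))                     # overlap 4 px, union 12 px
```

Both examples give an IoU of 1/3: half-overlapping shapes score well below 0.5, even though each shape covers half of the other.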

This score is then compared to a chosen IoU threshold (0.5 is a common choice).

Based on this comparison, each prediction is classified as follows:

- IoU ≥ threshold: The detection is correct, i.e. a true positive (TP)

- IoU < threshold: The detection is incorrect, i.e. a false positive (FP)

- If a ground-truth damage has no matching predicted box, it is a false negative (FN)

From these counts, we can then compute the model's precision and recall, which in turn determine its mAP, our measure of its accuracy.
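Turning IoU into TP, FP, and FN counts needs a matching rule. The sketch below follows a common convention: predictions are processed from highest to lowest confidence, each is matched to the remaining ground-truth box it overlaps most (if that IoU clears the threshold), and each ground truth can be matched only once, so duplicate boxes on the same damage count as false positives. The IoU helper is repeated so the block runs on its own; `match_detections` and the example boxes are hypothetical.

```python
def box_iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_detections(preds, truths, threshold=0.5):
    """Count TP, FP, FN. preds: list of (score, box); truths: list of boxes."""
    unmatched = list(range(len(truths)))
    tp = fp = 0
    for _, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best_j, best_iou = None, threshold
        for j in unmatched:
            overlap = box_iou(box, truths[j])
            if overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j is None:
            fp += 1                   # IoU below threshold with every remaining truth
        else:
            tp += 1
            unmatched.remove(best_j)  # each ground truth is matched only once
    fn = len(unmatched)               # ground-truth damage that was never found
    return tp, fp, fn


truths = [(10, 10, 50, 50), (100, 100, 140, 140)]
preds = [(0.9, (12, 12, 52, 52)),     # good match for the first damage
         (0.8, (200, 200, 240, 240)), # nothing there
         (0.6, (14, 10, 54, 50))]     # duplicate box on the first damage
tp, fp, fn = match_detections(preds, truths)
precision = tp / (tp + fp)
recall = tp / (tp + fn)
print(f"TP={tp} FP={fp} FN={fn} precision={precision:.2f} recall={recall:.2f}")
```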

Another metric used to evaluate and compare models is the F1 score, the harmonic mean of precision and recall. It can be computed using:

$$F_1 = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$

The results from this formula help us evaluate and compare models and finally pick the ones that perform the best.
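As a sketch of that comparison, the code below computes F1 from TP, FP, and FN counts and picks the model with the highest score. The model names and counts are hypothetical placeholders, not measured results.

```python
def f1_score(tp, fp, fn):
    """F1 = 2PR / (P + R), computed from detection counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical (TP, FP, FN) counts from evaluating three models on one test set.
results = {
    "model_a": (80, 40, 20),
    "model_b": (70, 10, 30),
    "model_c": (78, 8, 22),
}
f1_by_model = {name: f1_score(*counts) for name, counts in results.items()}
best = max(f1_by_model, key=f1_by_model.get)
for name, f1 in f1_by_model.items():
    print(f"{name}: F1={f1:.3f}")
print("best:", best)
```

Because F1 is a harmonic mean, a model cannot score well by maximizing only one of precision or recall: `model_a` finds the most damage but loses to the others on false positives.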

Conclusion

As time passes and technology advances, we must move to smarter vehicle inspection methods, including AI and automation, which have their own benefits.

(Also read: Humans vs. AI inspections: A comparison across 7 parameters)

While there are many models we can use for AI Car Damage Detection, each has its own set of drawbacks, so picking the right ones for each situation is crucial to getting the right results.

Want to explore how you can automate vehicle inspections and improve efficiency at your company? Book a demo with Inspektlabs to learn more.