Vehicle part detection using AI for vehicle damage and repair estimation | Inspektlabs


The body of a car has a large number of parts, each varying in shape, size, position and material. From the windshield to the back bumper, damage can appear on a variety of car parts.

Depending on the type and severity of the damage, a particular part may have to be repaired or replaced. Since every car part has its own pricing, it becomes essential to identify the part properly and thereby localize the damage, e.g. "front bumper: scratch".

Having a timely and accurate report of the type, severity and location of damage on a vehicle is becoming crucial for many automotive and insurance players, such as car resellers, insurers and car auction houses.

Why Inspektlabs?

Our AI based vehicle damage detection and repair estimation solutions provide our customers with the most comprehensive inspection reports while keeping the turnaround time to a minimum.

To do this, our AI damage detection models detect a wide variety of damage, from easily noticeable damage such as shattered glass and dents to minor damage such as scratches and line cracks.

However, such models are rarely useful alone. Complementing these models are our AI car part identification models. Both of these models work in conjunction to detect and localize the position, type and severity of damages on a car and generate an inspection report accordingly.

Part Identification:

In this blog, we will talk about our AI models for car part identification and the challenges that we faced while training them. Broadly speaking, car part identification can be done in two ways - part localization or part segmentation.

Part localization means drawing a best-fit bounding box around the part in the image, whereas part segmentation means classifying every pixel of the image, i.e. finding the class (in this case, the part) to which each pixel belongs.
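To make the difference concrete, here is a minimal sketch in plain Python. The class ids and the tiny mask are made up for this illustration and are not our production label map. It shows that a segmentation mask carries strictly more information than a bounding box: the box can always be derived from the mask, but not the other way round.

```python
# Hypothetical class ids, chosen only for this illustration.
BACKGROUND, FRONT_DOOR, FENDER = 0, 1, 2

# A tiny 4x6 "segmentation mask": one class id per pixel.
mask = [
    [0, 0, 0, 0, 0, 0],
    [0, 2, 2, 1, 1, 0],
    [0, 2, 1, 1, 1, 0],
    [0, 0, 0, 1, 0, 0],
]

def bounding_box(mask, class_id):
    """Return (x_min, y_min, x_max, y_max) covering all pixels of class_id, or None."""
    coords = [
        (x, y)
        for y, row in enumerate(mask)
        for x, value in enumerate(row)
        if value == class_id
    ]
    if not coords:
        return None
    xs = [x for x, _ in coords]
    ys = [y for _, y in coords]
    return (min(xs), min(ys), max(xs), max(ys))

front_door_box = bounding_box(mask, FRONT_DOOR)
fender_box = bounding_box(mask, FENDER)
print(front_door_box, fender_box)
```

In this toy example the two boxes overlap even though the two parts share no pixels in the mask, which is one reason a pixel-level mask is more useful when a damage has to be attributed to exactly one part.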

At Inspektlabs, we have trained models for both part localization and part segmentation and use them in different scenarios. In this blog, however, we will focus on car part segmentation, since it is more complicated and challenging.

List of Car Parts:

For this blog, we will use only the following list of car parts, and every pixel of the image is mapped to one of these parts. Our current product covers roughly twice as many parts as are listed below, including minor parts such as spoilers.

- Front Bumper

- Back Bumper

- Front Door

- Back Door

- Dicky (trunk/boot)

- Front Glass

- Back Glass

- Fender

- Quarter Panel

- Hood

- Window Glass

- Taillight

- Rear Reflector

- Fuel Door

- Running Board

- Pillar

- Side View Mirror

- Bumper Grill Top

- Bumper Grill Bottom

- Headlight

- Fog Light

- Wheel

- Wheel Rim

- License Plate

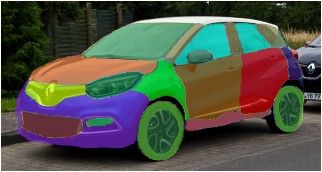

Figure 1:

Top – Side view of a car

Bottom – Our model’s output colour-coded for each part

In the above example, you can see how our AI model is able to confidently follow the edges of each individual part of a car and segment them out. Having this segmentation mask allows us to superimpose our AI damage detection results on the image and accurately identify the location of each damage.
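The superimposition step can be sketched as follows. This is a simplified illustration, not our production logic: the part ids, the toy masks and the majority-vote rule are all assumptions made for the example. The idea is to overlay a binary damage mask on the part mask and attribute the damage to the part that contains most of the damaged pixels.

```python
from collections import Counter

# Hypothetical part names and ids, for illustration only.
PART_NAMES = {0: "background", 1: "front door", 2: "fender"}

# Output of a (hypothetical) part segmentation model: one part id per pixel.
part_mask = [
    [0, 0, 0, 0, 0, 0],
    [0, 2, 2, 1, 1, 0],
    [0, 2, 2, 1, 1, 0],
    [0, 0, 0, 0, 0, 0],
]

# Output of a (hypothetical) damage model: 1 where a scratch was detected.
scratch_mask = [
    [0, 0, 0, 0, 0, 0],
    [0, 0, 1, 1, 1, 0],
    [0, 0, 0, 1, 1, 0],
    [0, 0, 0, 0, 0, 0],
]

def locate_damage(part_mask, damage_mask):
    """Return the name of the part that contains most of the damaged pixels."""
    votes = Counter(
        part_id
        for part_row, damage_row in zip(part_mask, damage_mask)
        for part_id, damaged in zip(part_row, damage_row)
        if damaged and part_id != 0  # ignore damage pixels on the background
    )
    if not votes:
        return None
    part_id, _ = votes.most_common(1)[0]
    return PART_NAMES[part_id]

report_entry = (locate_damage(part_mask, scratch_mask), "scratch")
print(report_entry)
```

Here four of the five scratch pixels fall on the front door and one on the fender, so the scratch is reported against the front door.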

Challenges in Car Part Identification:

Identifying individual car parts accurately in an image is a challenging problem. There are countless factors that we have to take into consideration - from the shape, colour and type of a vehicle to the camera resolution and exposure, even to the lighting conditions in which the image is taken.

Most of these factors are outside our control, so the segmentation models that we train must be robust enough to overcome these difficulties. At Inspektlabs, we always strive to provide our customers with the best AI based damage detection and claim estimation solutions.

To do this, our AI models have to be iterated upon continuously, identifying points of failure and solving them. Below, we discuss a few of the major challenges that we faced while creating and training our AI car part identification model.

Dealing with Non-Car vehicles:

Vehicles come in different shapes and sizes, especially if we consider vans, whose structure is quite different from that of cars. First, they may not even have a rear door.

Second, even if they have a rear door, its shape is very different from that of a traditional car. Likewise, the quarter panels of vans are structurally different. As a result, it becomes extremely difficult for an AI model to generalise well to vans and other vehicles such as trucks and caravans.

Figure 2:

Top – Rear Isometric perspective of a van

Bottom – Our part model’s colour-coded output

However, when we look at vans from a front or front-isometric view, they look quite similar to cars, i.e. the shapes and sizes of their parts are not so different from those of cars. As a result, the AI model is better able to predict the van parts in such scenarios.

Figure 3:

Top – Front Isometric perspective of a van

Bottom – Our part model’s colour-coded output

You can see how the hood, headlights, front bumper, bumper grills, fender, front glass and even the front door are positioned much as they are on a car. This allows our model to generalize better to this image and produce acceptable results.

Cars that only have single doors on each side:

Generally, we expect cars to have a front door and rear door on both sides. However, there exist many cars (mainly sports and exotic cars) that have a single door on each side.

What should such a door be called? Front? Rear? Even if we decide on a particular convention, it is difficult, even for a human, to tell what type of door it is without seeing the entire car. That is, we need to draw context from other parts of the car in the image to classify the pixels of that door.

Figure 4:

Top – Car with single (front) door

Bottom – Model’s colour-coded output

As you can see, the model struggles to properly segment out the door and assign it to a single class. When looking from a front isometric view, the order of parts is generally Fender, Front Door, Rear Door and Quarter Panel.

The model has learnt this order and tries to predict accordingly, thus mistakenly predicting the Quarter Panel of the car as Rear Door. That is, the model sometimes fails when it comes across such outlier cases.

We tried to tackle this problem mainly by incorporating more such cases in our dataset, even going through the tedious process of handpicking the images that we deemed most appropriate. This allowed us to get better results, as showcased in the images below:

Figure 5:

Top – Another car with single (front) door

Bottom – Model’s colour-coded output

Dealing with very zoomed-in cases, where it becomes difficult to identify a part independently:

When looking at a zoomed-in image, even humans may find it difficult to identify a part directly and instead try to derive context from the surrounding parts. This applies to an AI model as well, so accurately identifying parts in very zoomed-in cases, where only one or two parts are visible, becomes extremely challenging.

To tackle this issue, we had to oversample such cases in our dataset and handpick certain challenging orientations. After quite a lot of iterations, we finally managed to achieve some acceptable results:

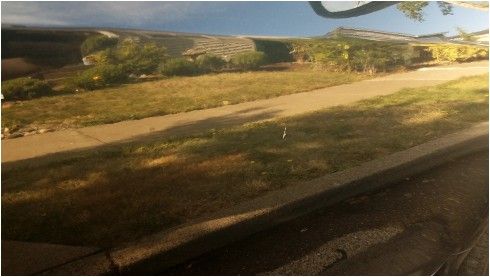

Figure 6:

Top – Zoomed-in view of the front bumper of a car

Bottom – Model’s colour-coded output
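One simple way to oversample zoomed-in cases, as mentioned above, is to give them a higher sampling weight when drawing training examples. The sketch below is only an illustration of that idea: the records, the `zoomed_in` flag and the boost factor of 4 are assumptions for the example, not a description of our actual dataset or pipeline.

```python
import random

# Hypothetical training records; the file names and the "zoomed_in" flag are
# assumptions made for this example.
dataset = [
    {"image": "car_001.jpg", "zoomed_in": False},
    {"image": "car_002.jpg", "zoomed_in": False},
    {"image": "car_003.jpg", "zoomed_in": False},
    {"image": "car_004.jpg", "zoomed_in": False},
    {"image": "car_005_closeup.jpg", "zoomed_in": True},
]

def sampling_weights(records, boost=4.0):
    """Give zoomed-in images `boost` times the sampling weight of other images."""
    return [boost if record["zoomed_in"] else 1.0 for record in records]

rng = random.Random(0)  # fixed seed so the sketch is reproducible
weights = sampling_weights(dataset)

# Draw one "epoch" of 1000 training examples with replacement.
epoch = rng.choices(dataset, weights=weights, k=1000)
zoomed_share = sum(record["zoomed_in"] for record in epoch) / len(epoch)
print(f"share of zoomed-in images in the epoch: {zoomed_share:.2f}")
```

In this toy setup, zoomed-in images make up a fifth of the dataset but roughly half of each sampled epoch in expectation, so the model sees the difficult cases far more often.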

However, our model continues to fail in certain cases – cases in which even a human might give incorrect results. One such example is shown below:

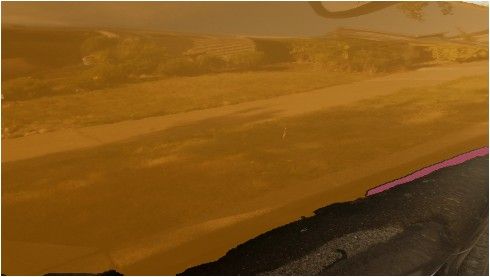

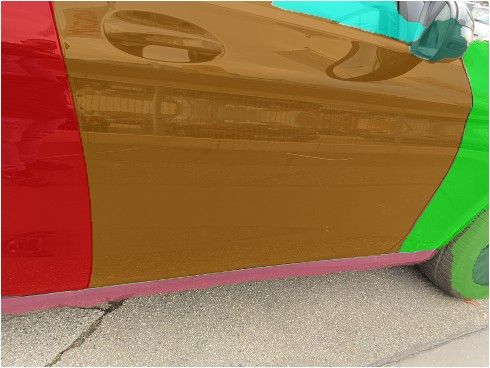

Figure 7:

Top – Extremely zoomed-in view of the front door of a highly reflective car

Bottom – Model’s colour-coded output

Dealing with highly reflective cars:

When cars have glossy or metallic surfaces, reflections are rampant. In Computer Vision, we use Convolutional Neural Networks, which are made up of convolutional layers.

Conv layers, described in the simplest terms, are basically edge (and pattern) detectors. When there are many reflections on the surface of a car, the number of edges increases significantly. However, most of these edges belong not to the car itself but to the reflections, i.e. they can be thought of as noise, and this noise keeps an AI model from performing to its fullest extent.
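The effect can be illustrated with a hand-written edge filter. The sketch below is a toy example, not part of our model: it applies a fixed Sobel kernel (a learned conv layer would learn its own kernels) to two made-up greyscale strips, one with a single real panel edge and one with extra bright stripes standing in for reflections.

```python
# Sobel kernel that responds to vertical edges (horizontal intensity changes).
SOBEL_X = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

def convolve_valid(image, kernel):
    """Slide a 3x3 kernel over the image without padding (cross-correlation,
    which is what CNN libraries call convolution)."""
    height, width = len(image), len(image[0])
    output = []
    for y in range(height - 2):
        row = []
        for x in range(width - 2):
            row.append(sum(
                kernel[ky][kx] * image[y + ky][x + kx]
                for ky in range(3)
                for kx in range(3)
            ))
        output.append(row)
    return output

def edge_pixels(image, threshold=2):
    """Count positions where the filter response is strong enough to be an edge."""
    response = convolve_valid(image, SOBEL_X)
    return sum(abs(value) >= threshold for row in response for value in row)

# Toy 5x8 greyscale images (0 = dark, 1 = bright); every row is identical.
matte_panel = [[0, 0, 0, 0, 1, 1, 1, 1] for _ in range(5)]       # one real edge
reflective_panel = [[0, 1, 1, 0, 1, 1, 0, 1] for _ in range(5)]  # reflection stripes

print(edge_pixels(matte_panel), edge_pixels(reflective_panel))
```

The striped panel produces twice as many edge responses as the matte one, and a model has no easy way of telling from these responses alone which of them mark a real part boundary.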

Figure 8:

Top – Side view of the front door of a reflective car

Bottom – Model’s colour-coded output

Figure 9:

Top – Side view of the rear door - quarter panel section of a reflective car

Bottom – Model’s colour-coded output

Properly identifying Minor Parts:

We consider the following to be minor parts:

- Rear Reflector

- Fuel Door

- Side View Mirror

- Bumper Grill Top

- Bumper Grill Bottom

- Fog Light

- License Plate

We treat the remaining parts as major parts.

Minor parts are generally smaller in size and tend to overlap with the positions of the major parts. Moreover, minor parts vary greatly in their shape and position on a car compared to major parts. As a result, it is quite difficult to accurately localize the minor parts of a car.

Generally, an AI model tends to be biased towards classes that are easy to predict or that occur frequently in the dataset. Minor parts satisfy neither of these conditions. As a result, during our initial runs, we noticed that our model managed, for example, to correctly segment the Hood, Headlight and Bumper but failed to predict the Fog Lights and the top and bottom Bumper Grills.

To tackle this, we changed a component of our loss function from Cross Entropy to Focal Loss. Focal Loss improves over the former by giving more weight to hard examples (in our case, the minor parts) and down-weighting the easy examples (in our case, the major parts).

In short, Focal Loss penalises unconfident predictions and, unlike Cross Entropy, tends not to push values to the edges of the [0,1] range. This is what we want in our case, mainly because we need to deal with undersampled classes and move their predictions away from the 0.5 midpoint, instead of focusing on pushing the scores of major parts to the 0 and 1 edges.
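The down-weighting can be seen in a small numeric sketch. It uses the standard per-prediction form of Focal Loss, which multiplies Cross Entropy by (1 - p)^gamma, where p is the probability assigned to the correct class. The value gamma = 2 and the two example probabilities are assumptions for illustration, not the settings of our model.

```python
import math

def cross_entropy(p_true):
    """Cross Entropy for one prediction; p_true is the probability of the correct class."""
    return -math.log(p_true)

def focal_loss(p_true, gamma=2.0):
    """Focal Loss: Cross Entropy scaled by (1 - p_true) ** gamma.

    Confident, correct predictions (p_true close to 1) are scaled down
    strongly; unconfident ones keep most of their loss.
    """
    return (1.0 - p_true) ** gamma * cross_entropy(p_true)

easy = 0.95  # e.g. a hood pixel that the model is already sure about
hard = 0.30  # e.g. a fog light pixel that the model is unsure about

for name, p in (("easy", easy), ("hard", hard)):
    ratio = focal_loss(p) / cross_entropy(p)
    print(f"{name}: focal loss is {ratio:.4f} x cross entropy")
```

With gamma = 2, the loss of the easy pixel is scaled by (1 - 0.95)^2 = 0.0025, while the hard pixel keeps (1 - 0.30)^2 = 0.49 of its Cross Entropy loss, so the hard examples dominate the training signal. Setting gamma to 0 recovers plain Cross Entropy.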

Secondly, we moved from a single-label multi-class problem to a multi-label multi-class problem. This allowed us to manually fine-tune a threshold for each part, so that we could use a lower threshold for minor parts than for major parts.

Having such fine-grained control over our model’s prediction and processing stage enabled us to first generate an overlay of all the major parts and then superimpose an overlay of the minor parts on it. We were thus able to get some pretty good results:

Figure 10:

Top - Front Isometric view of car

Bottom - Model’s colour-coded output (with the minor parts)

Figure 11:

Top - Front view of car

Bottom - Model’s colour-coded output (with the minor parts)
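The per-part thresholds and the two-stage overlay described in this section can be sketched as follows. The scores, the threshold values and the flattened one-row "image" are assumptions made for this example and are not our production values.

```python
# Hypothetical per-part thresholds: lower for minor parts than for major parts.
THRESHOLDS = {"front bumper": 0.5, "fog light": 0.3}
MINOR_PARTS = {"fog light"}

# Hypothetical per-pixel scores for a strip of four pixels. In a multi-label
# setup each part has its own independent score map, so the scores of
# different parts at the same pixel do not need to sum to 1.
scores = {
    "front bumper": [0.9, 0.8, 0.7, 0.2],
    "fog light": [0.1, 0.35, 0.6, 0.1],
}

def compose_overlay(scores, thresholds, minor_parts):
    """Threshold every part separately, draw major parts first, minor parts on top."""
    n_pixels = len(next(iter(scores.values())))
    overlay = [None] * n_pixels  # None means "no part detected at this pixel"
    # False sorts before True, so major parts are drawn before minor parts.
    ordered = sorted(scores, key=lambda part: part in minor_parts)
    for part in ordered:
        for i, score in enumerate(scores[part]):
            if score >= thresholds[part]:
                overlay[i] = part
    return overlay

overlay = compose_overlay(scores, THRESHOLDS, MINOR_PARTS)
print(overlay)
```

The second pixel shows why the lower threshold matters: its fog light score of 0.35 would be discarded at a threshold of 0.5, leaving the pixel labelled as front bumper, whereas with the lower minor-part threshold the fog light is kept and drawn on top.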

Conclusion

Accurately localizing every individual part of a car is an extremely challenging and interesting problem.

We have been able to achieve highly accurate results with deep learning by iteratively solving real-world problems, e.g. highly zoomed-in images, single-door cars, etc.

Inspektlabs is positioned in the insurance and automotive industry as one of the leading AI based service providers, providing motor insurers, car rental, and used car players with the latest technological solutions powered by AI and Computer Vision.

Inspektlabs’ state-of-the-art AI damage detection, car part identification and background removal solutions have been designed to provide its customers with highly accurate results while keeping the turnaround time to a minimum.