Did OpenAI make Car Insurance Inspection Fraud easier?

With OpenAI’s latest updates to GPT-4o’s image generation capabilities, a new concern is rising in the auto insurance world: has AI made car insurance fraud easier? And more importantly, can insurers still trust the integrity of images submitted during a car insurance inspection?

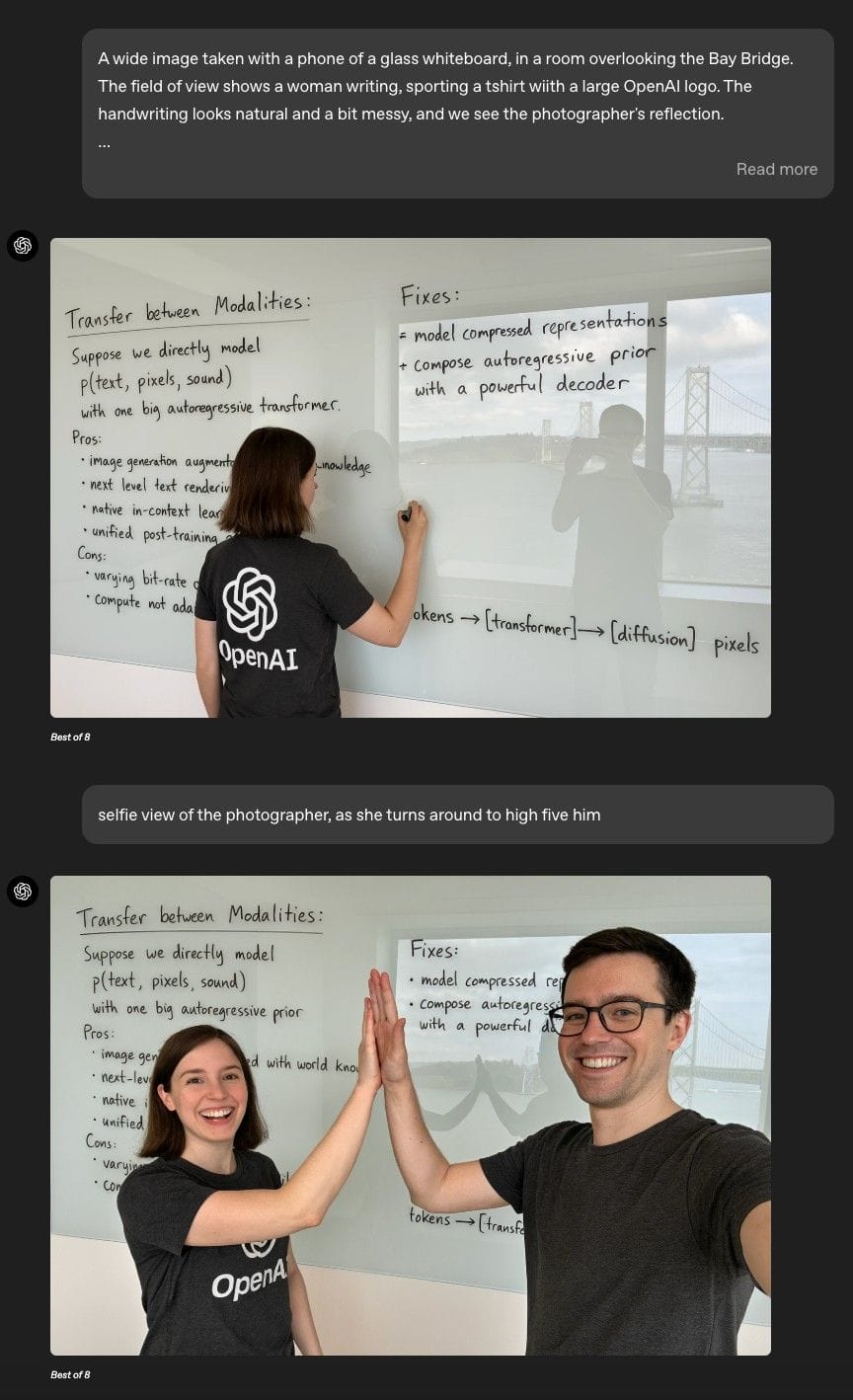

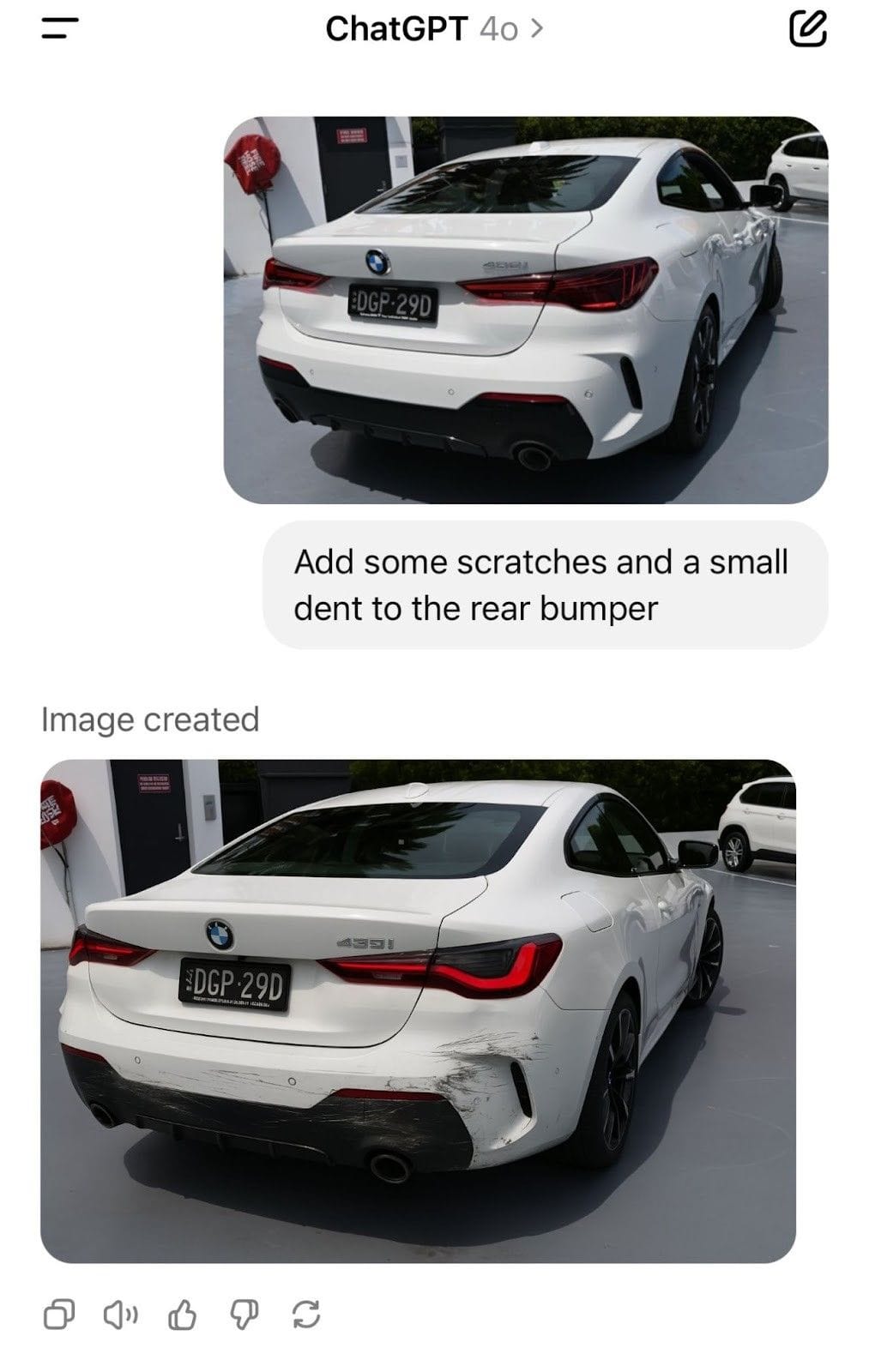

OpenAI’s latest release introduces an advanced image generation model that significantly outperforms its predecessor, DALL-E 3. The result? Hyper-realistic images, created from simple text prompts, that are very hard to distinguish from real photos. Here’s an example:

Yes, these are AI-generated images. And they look strikingly real!

This poses a critical question for car insurance inspections: could users now generate fake vehicle damage photos and submit them as part of fraudulent claims?

Linas Beliūnas raised the same question on LinkedIn a few days ago. Honestly, the results generated from the prompts are quite impressive.

Given that insurance fraud costs U.S. insurers more than $80 billion annually, the threat of AI-generated fake images is very real.

But there’s good news.

How Inspektlabs Protects Against Fraud in Car Insurance Inspection

At Inspektlabs, we’re already one step ahead. Our AI-powered vehicle inspection tool was built with fraud-prevention at its core, and it’s well-equipped to address this evolving challenge.

Real-time image & video capture only

The first (and most effective) defense against AI-generated fraud is to prevent users from uploading edited or fabricated images.

That’s why our platform mandates direct capture only. With Inspektlabs’ vehicle inspection tool, users must take photos or videos of the vehicle damage in real time during the car insurance inspection. No previously saved or AI-generated content can be used.

So even if someone creates fake damage images using AI, our system makes it impossible to submit them.
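As a rough illustration of how a direct-capture rule could be enforced on the backend, here is a minimal Python sketch. It is not a description of the Inspektlabs implementation: the function names, the shared `SERVER_KEY`, and the five-minute session window are all assumptions made for this example. The idea is that each capture session gets a one-time nonce, the capture component signs the media together with that nonce, and the server rejects anything that is unsigned, altered, or stale.

```python
import hashlib
import hmac
import secrets
import time

# Hypothetical secret available to the capture component and the server (assumption).
SERVER_KEY = secrets.token_bytes(32)
SESSION_TTL_SECONDS = 300  # assumed validity window for one capture session


def start_capture_session(now=None):
    """Issue a one-time nonce and its issue time when the in-app camera opens."""
    issued_at = time.time() if now is None else now
    return secrets.token_hex(16), issued_at


def sign_capture(nonce, media_bytes, key=SERVER_KEY):
    """Bind the captured media to the session nonce with an HMAC tag."""
    digest = hashlib.sha256(media_bytes).digest()
    return hmac.new(key, nonce.encode() + digest, hashlib.sha256).hexdigest()


def accept_submission(nonce, issued_at, media_bytes, tag, now=None, key=SERVER_KEY):
    """Accept media only if the tag matches and the session is still fresh."""
    current = time.time() if now is None else now
    fresh = 0 <= current - issued_at <= SESSION_TTL_SECONDS
    expected = sign_capture(nonce, media_bytes, key)
    return fresh and hmac.compare_digest(expected, tag)


# Usage: a frame captured in-session passes; a swapped-in file does not.
nonce, issued_at = start_capture_session()
tag = sign_capture(nonce, b"live camera frame")
print(accept_submission(nonce, issued_at, b"live camera frame", tag))
print(accept_submission(nonce, issued_at, b"ai generated image", tag))
```

A production system would likely protect the signing key with platform attestation or hardware-backed storage rather than a shared secret; the sketch only shows the freshness and integrity checks.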

Inspektlabs AI comes with Playback detection

But what if someone tries to “trick” the system by recording a video of a screen displaying a damaged vehicle?

Our AI model can detect that too.

Using playback detection, our solution identifies telltale signs of screen recordings or pre-recorded media. These cases are automatically flagged as fraud attempts, a significant advancement powered by our insurance claims AI.

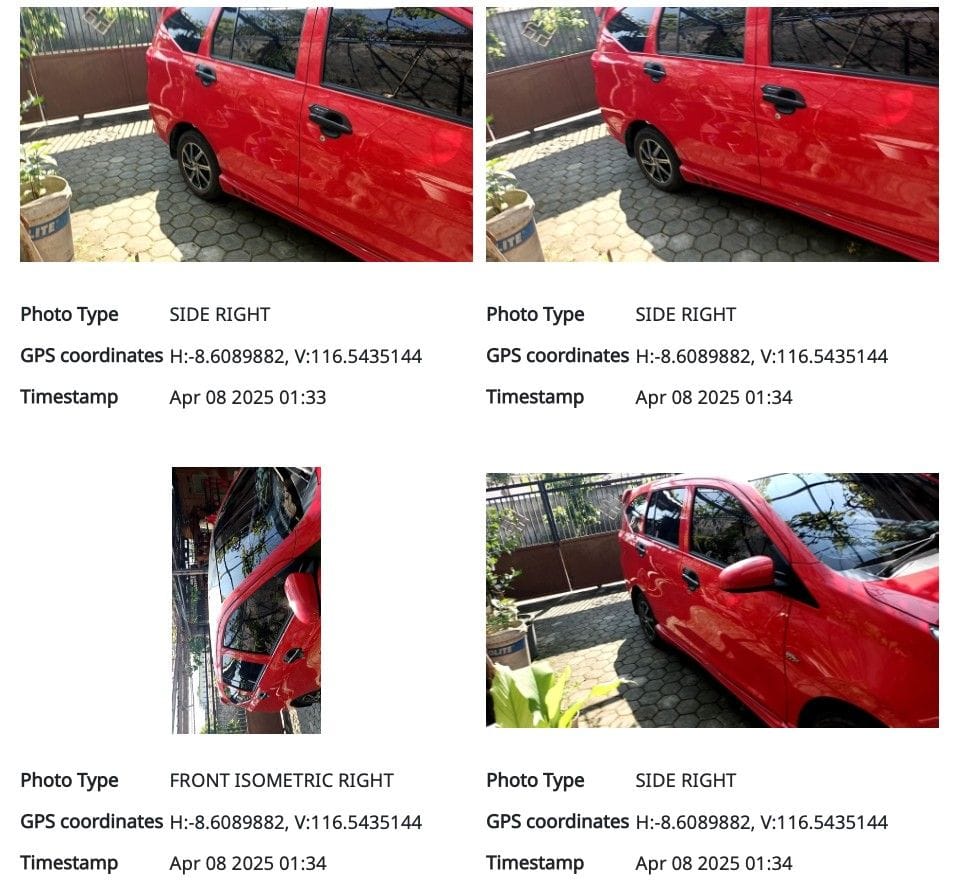

Tamper-proof Metadata Analysis

In addition to real-time capture, Inspektlabs tracks and analyzes image metadata, i.e., details that AI-generated media can’t reliably spoof.

This includes GPS coordinates, timestamp data, device ID and camera information, and media encoding details.

Our insurance claims AI cross-references this data with image content to ensure authenticity. If anything seems off, it’s flagged for manual review or automatically rejected.
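To make the idea of metadata cross-checking concrete, here is a minimal Python sketch. It is an assumption-laden illustration, not the Inspektlabs pipeline: the `CaptureMetadata` fields, the flag names, and the 50 km and 30 minute thresholds are all hypothetical, and it compares metadata only against session context (device, start time, expected location), not against image content.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from math import asin, cos, radians, sin, sqrt


@dataclass
class CaptureMetadata:
    # Hypothetical field names; a real pipeline would map these from EXIF or SDK data.
    latitude: float
    longitude: float
    captured_at: datetime
    device_id: str


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points in kilometres."""
    lat1, lon1, lat2, lon2 = map(radians, (lat1, lon1, lat2, lon2))
    a = sin((lat2 - lat1) / 2) ** 2 + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2
    return 2 * 6371.0 * asin(sqrt(a))


def review_flags(meta, session_device_id, session_start, expected_lat, expected_lon,
                 max_distance_km=50.0, max_age=timedelta(minutes=30)):
    """Return the reasons to flag the media; an empty list means no flags."""
    flags = []
    if meta.device_id != session_device_id:
        flags.append("device_mismatch")
    if not (session_start <= meta.captured_at <= session_start + max_age):
        flags.append("timestamp_outside_session")
    if haversine_km(meta.latitude, meta.longitude, expected_lat, expected_lon) > max_distance_km:
        flags.append("location_too_far")
    return flags


# Usage: a photo taken far away, days earlier, on another device raises every flag.
start = datetime(2025, 4, 1, 10, 0, tzinfo=timezone.utc)
suspicious = CaptureMetadata(34.0522, -118.2437, start - timedelta(days=2), "dev-2")
print(review_flags(suspicious, "dev-1", start, 40.73, -74.0))
```

Returning a list of reasons rather than a single pass/fail value fits the workflow described above, where some cases go to manual review and others are rejected outright.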

This ensures a secure, verifiable car insurance inspection process, something that most traditional methods lack.

Also read: Fraud detection using AI for car damage assessment by Inspektlabs

There’s more innovation in progress at Inspektlabs

We’re also investing in training deep learning models, including CNNs and Vision Transformers (ViTs), to detect AI-generated vs. real images (Meta is building similar detection systems to protect content integrity on social media platforms).

This future-proofing ensures our platform can adapt, even in workflows where clients allow image uploads.

As the nature of fraud changes, we’re committed to staying one step ahead, and enabling insurers to confidently assess car damage without falling prey to sophisticated manipulation.

The future of car insurance inspections

OpenAI’s image generation advancements are remarkable, but they also present new challenges, especially in fields like car insurance inspections, where visual authenticity is critical.

With comprehensive solutions like real-time damage capture, playback detection, metadata tracking, and ongoing innovation in deepfake detection, Inspektlabs is ensuring that the future of vehicle inspections remains secure.

We’re not just identifying fake claims; we’re empowering insurers to take action with confidence using cutting-edge vehicle inspection tools and insurance claims AI.

Stay tuned for more breakthroughs as we continue building the most advanced, fraud-resistant tools to help insurers accurately assess car damage and streamline the inspection process.